Introducing 'EMO' by Alibaba Research: Transforming Photos into Realistic Talking and Singing Videos

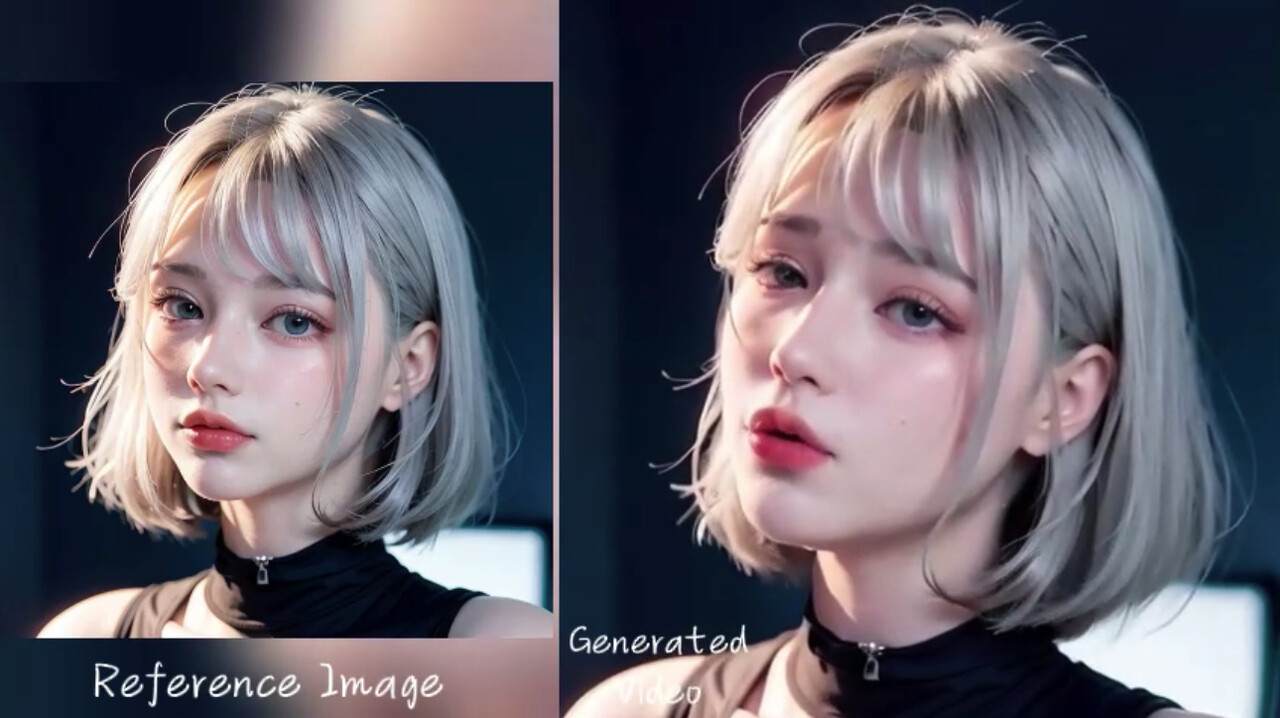

On the heels of Pika Labs releasing Lip Sync, researchers at Alibaba's Institute for Intelligent Computing have published a research paper detailing a new artificial intelligence system called 'EMO,' short for 'Emote Portrait Alive.' EMO can animate a single portrait image or photo and generate realistic videos of the person talking or singing.

EMO: Emote Portrait Alive

The Alibaba research paper is titled "EMO: Emote Portrait Alive - Generating Expressive Portrait Videos with Audio2Video Diffusion Model under Weak Conditions".

Alibaba Researchers: Linrui Tian, Qi Wang, Bang Zhang, Liefeng Bo

EMO Project: https://humanaigc.github.io/emote-portrait-alive/

EMO Research Paper: https://arxiv.org/abs/2402.17485

EMO PDF: https://arxiv.org/pdf/2402.17485.pdf

EMO GitHub: https://github.com/HumanAIGC/EMO

"We proposed EMO, an expressive audio-driven portrait-video generation framework. Input a single reference image and the vocal audio, e.g. talking and singing, our method can generate vocal avatar videos with expressive facial expressions, and various head poses, meanwhile, we can generate videos with any duration depending on the length of input audio." - Alibaba EMO Researchers

What is EMO?

EMO creates smooth, expressive facial movements and head poses that closely match the nuances of the input audio track.

EMO Generates Realistic Speaking Avatars

According to the research paper, the goal is to "establish an innovative talking head framework designed to capture a broad spectrum of realistic facial expressions, including nuanced micro-expressions, and to facilitate natural head movements, thereby imbuing generated head videos with an unparalleled level of expressiveness".

This represents a major advance in audio-driven talking head video generation, an area that has challenged AI researchers for years.

"EMO is a novel framework that utilizes a direct audio-to-video synthesis approach, bypassing the need for intermediate 3D models or facial landmarks. Our method ensures seamless frame transitions and consistent identity preservation throughout the video, resulting in highly expressive and lifelike animations." - Alibaba EMO Researchers

EMO Generates Vocal and Singing Avatars

Beyond talking heads, EMO introduces vocal avatar generation: from just a single image and an audio input, it produces videos with expressive facial expressions and head movements, and the video can run for any duration, determined by the length of the audio.

In a capability that could prove controversial in Hollywood, EMO can make a portrait perform famous songs or deliver lines in various languages.

"Experimental results demonstrate that EMO is able to produce not only convincing speaking videos but also singing videos in various styles, significantly outperforming existing state-of-the art methodologies in terms of expressiveness and realism." - Alibaba EMO Researchers

EMO shows promising accuracy and expressiveness. Beyond supporting multilingual and multicultural expressions, the model excels at capturing fast-paced rhythms and conveying expressive movements synchronized with the audio.

EMO Animates Spoken Audio In Numerous Languages

EMO’s functionality goes beyond vocal and singing avatars. It can also animate portraits to match spoken audio in numerous languages, bringing to life historical figures, artwork, and even AI-generated characters.

This versatility enables potentially controversial uses, such as conversations with iconic figures or cross-actor performances, while also offering new creative avenues for character portrayal across different media and cultural contexts.

EMO creates new possibilities for engaging content creation, such as music videos or performances that require detailed synchronization between music and visual elements.

It is worth noting that Alibaba Group owns Youku, one of China's top online video and streaming service platforms.

EMO Summary:

Innovative Approach: EMO leverages an audio-to-video synthesis method that bypasses the need for intermediate 3D models or facial landmarks, enhancing realism and expressiveness in video generation.

New Methodology: Unlike previous methodologies relying on 3D face models or blend shapes, EMO directly converts audio waveforms into video frames, capturing subtle nuances and identity-specific quirks associated with natural speech.

Enhanced Expressiveness: The model produces not only convincing talking videos but also singing videos in various styles, significantly outperforming state-of-the-art methods in terms of expressiveness and realism.

Comprehensive Dataset: The model is trained on a vast dataset of over 250 hours of footage and more than 150 million images, covering a wide range of content and languages.

Superior Performance: Demonstrated through extensive experiments and user studies, EMO surpasses current methodologies across multiple metrics (FID, SyncNet, F-SIM, FVD), showcasing its ability to generate natural and expressive videos.

Methodology: Integrates stable control mechanisms for enhancing generation stability without compromising expressiveness, including a speed controller and a face region controller.
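For readers unfamiliar with the metrics listed above: FID (Fréchet Inception Distance) compares the mean and covariance of feature vectors extracted from real and generated frames, and lower values indicate closer distributions. FVD applies the same distance to video-level features. The sketch below computes the standard Fréchet distance formula, FID = ||mu_a - mu_b||^2 + Tr(S_a + S_b - 2 (S_a S_b)^(1/2)), with NumPy. It assumes the feature vectors (normally taken from a pretrained Inception network) have already been extracted; the function name `frechet_distance` is hypothetical, and this is an illustration of the metric, not the EMO authors' evaluation code.

```python
import numpy as np


def frechet_distance(feats_a: np.ndarray, feats_b: np.ndarray) -> float:
    """Fréchet distance between two sets of feature vectors.

    Each input is a 2-D array of shape (num_samples, feature_dim).
    Illustrative sketch only; assumes features are already extracted.
    """
    # First- and second-order statistics of each feature set.
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    cov_a = np.atleast_2d(np.cov(feats_a, rowvar=False))
    cov_b = np.atleast_2d(np.cov(feats_b, rowvar=False))

    # Tr((S_a S_b)^(1/2)) equals the sum of the square roots of the eigenvalues
    # of S_a S_b. Those eigenvalues are real and non-negative because S_a S_b is
    # similar to a positive semi-definite matrix; clipping removes rounding noise.
    eigvals = np.linalg.eigvals(cov_a @ cov_b)
    tr_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()

    # Squared distance between the means plus the covariance term.
    mean_term = np.sum((mu_a - mu_b) ** 2)
    return float(mean_term + np.trace(cov_a) + np.trace(cov_b) - 2.0 * tr_sqrt)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    real = rng.normal(size=(500, 4))
    print(frechet_distance(real, real))        # close to 0 for identical sets
    print(frechet_distance(real, real + 3.0))  # mean shift only: close to 4 * 3**2
```

Identical feature sets give a distance of (numerically) zero, and a pure shift of the mean adds exactly the squared shift, which is why generated frames that drift away from the statistics of real footage score worse.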

EMO Demo Videos

>> HumanAIGC-EMO:Emote Portrait Alive

Is EMO Similar to Pika Labs Lip Sync?

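This week Pika announced Lip Sync, a new feature for its paying subscribers. Lip Sync lets users add spoken dialog to their videos using AI-generated voices from the separate generative audio startup ElevenLabs, and it animates the speaking characters’ mouths so they move in time with the dialog. Both tools synchronize mouth movement with speech, but Lip Sync is a commercial feature focused on matching mouths to added dialog, while EMO is a research system that animates a single still image with full facial expressions and head movements.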

EMO Limitations

EMO does have limitations. The quality of the input audio and reference image strongly affects the output, and the demo videos suggest room for improvement in audio-visual synchronization and emotion recognition, which would further enhance the realism and nuance of the generated videos.


More Research from Alibaba Group's Institute for Intelligent Computing:

Animate Anyone: Consistent and Controllable Image-to-Video Synthesis for Character Animation

Outfit Anyone: Ultra-high quality virtual try-on for Any Clothing and Any Person

VividTalk: One-Shot Audio-Driven Talking Head Generation Based on 3D Hybrid Prior

Cloth2Tex: A Customized Cloth Texture Generation Pipeline for 3D Virtual Try-On

Alibaba Platforms

Alibaba.com: A global B2B e-commerce platform connecting Chinese suppliers with international buyers.

AliExpress: A global retail service allowing consumers worldwide to buy products directly from wholesalers and manufacturers in China.

Taobao: A leading C2C and B2C platform in China for a wide range of consumer goods, comparable to eBay.

Tmall: A premium B2C platform in China for consumers to buy branded goods, serving the middle and upper class with high-quality products.

1688.com: A domestic B2B trading platform in China, focusing on providing wholesale and manufacturing goods.

Fliggy: Alibaba's travel service platform, offering bookings for flights, hotels, and vacation packages.

Lazada: A leading e-commerce platform in Southeast Asia, serving Indonesia, Malaysia, Philippines, Singapore, Thailand, and Vietnam.

Youku: One of China's top online video and streaming service platforms.

DingTalk: An enterprise communication and collaboration platform.

Alibaba Cloud: The cloud computing branch of Alibaba, offering a wide range of cloud services.

Ant Group: Alibaba’s fintech arm, operating Alipay, one of China's leading mobile and online payment platforms.

Cainiao: A logistics company optimizing the delivery process for e-commerce across China and globally.

For insight into building LLMs in China, Binyuan Hui, a natural language processing researcher on Alibaba’s large language model team Qwen, shared his daily schedule on X, mirroring a post by OpenAI researcher Jason Wei that recently went viral.

Alibaba staffer offers a glimpse into building LLMs in China

#ai #alibaba #aivideo #emo #alibabagroupresearch #avatars
